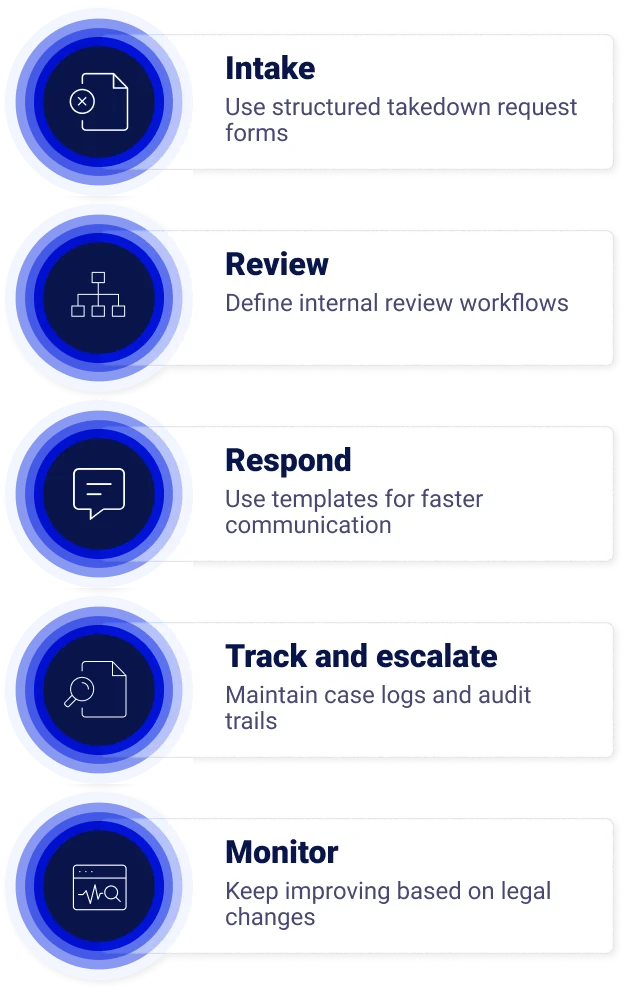

How to Build a Takedown Request Workflow: Step-by-Step Guide for Businesses

Introduction

Online content removal isn’t just a policy choice; it’s a legal requirement in many jurisdictions. From copyright violations to harmful or illegal content, businesses must develop takedown workflows that align with evolving laws like the EU Digital Services Act (DSA), the U.S. DMCA, and Australia’s Online Safety Act.

In this step-by-step guide, we walk you through how to set up a takedown request workflow that supports timely handling, consistent documentation, and built-in response processes so your business can handle requests in a structured and responsible way.

Why businesses need a takedown request workflow

Content takedown obligations are becoming standard globally. A strong workflow helps:

- Respond to notices within required timeframes

- Reduce legal risk across jurisdictions

- Protect users from harmful, illegal, or unauthorized content

- Manage and track cases through their full lifecycle

Without a structured workflow, businesses risk missing regulatory deadlines, failing audits, and damaging trust with regulators, users, and partners.

Step 1: Intake — Use structured takedown request forms

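A takedown workflow starts with the right intake. Regulatory frameworks like the EU Digital Services Act (DSA) require hosting providers to offer clear, easy-to-use ways to submit notices about illegal content. Similarly, the DMCA requires copyright takedown notices to contain specific elements, such as identification of the copyrighted work, the location of the allegedly infringing material, contact details, and a good-faith statement.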

What to include in your form:

- Who is submitting the request

- Link(s) to the content in question

- Reason for the request (e.g. copyright, harm, misinformation)

- Supporting evidence or explanation

- Consent to share data for investigation

Incomplete or vague intake forms can lead to delays, rejected requests, and legal exposure if critical information is missing.
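To make the form fields above concrete, here is a minimal Python sketch of an intake record with a completeness check. The `TakedownRequest` class, the `validate_request` function, and the `ALLOWED_REASONS` set are hypothetical names chosen for illustration, and the reason categories simply mirror the examples in the list. A real form would need whatever fields the applicable law requires.

```python
from dataclasses import dataclass
from urllib.parse import urlparse

# Hypothetical categories, mirroring the examples in the form list above.
ALLOWED_REASONS = {"copyright", "harm", "misinformation"}


@dataclass
class TakedownRequest:
    submitter: str           # who is submitting the request
    content_urls: list       # link(s) to the content in question
    reason: str              # reason for the request
    evidence: str            # supporting evidence or explanation
    consent_to_share: bool   # consent to share data for investigation


def validate_request(req: TakedownRequest) -> list:
    """Return a list of problems; an empty list means the form is complete."""
    problems = []
    if not req.submitter.strip():
        problems.append("submitter is required")
    if not req.content_urls:
        problems.append("at least one content link is required")
    for url in req.content_urls:
        parsed = urlparse(url)
        if parsed.scheme not in ("http", "https") or not parsed.netloc:
            problems.append(f"not a valid link: {url}")
    if req.reason not in ALLOWED_REASONS:
        problems.append(f"unknown reason: {req.reason}")
    if not req.evidence.strip():
        problems.append("supporting evidence or explanation is required")
    if not req.consent_to_share:
        problems.append("consent to share data is required")
    return problems
```

Returning every problem at once, rather than stopping at the first, lets the form ask the submitter for all missing details in a single round trip.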

Step 2: Review — Define internal review workflows

Once a request is received, assign it to a reviewer or team for evaluation. The Online Safety Act (Australia) requires digital services to act rapidly on harmful content, within 24 hours in some cases. The DSA also introduces obligations to assess the legality of content and issue decisions in a timely manner.

Helpful additions:

- Automated notifications

- Assigned case owners

- Pre-set deadlines based on jurisdiction

Clear review paths allow requests to be handled consistently, reduce human error, and help meet strict regional deadlines such as those under the DSA and Australia’s Online Safety Act.
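Pre-set deadlines based on jurisdiction can be as simple as a lookup table. The sketch below is illustrative only: the regime keys, the `review_deadline` and `is_overdue` helpers, and every window other than the 24-hour Australian case mentioned above are assumptions standing in for internal targets, not statutory deadlines.

```python
from datetime import datetime, timedelta, timezone

# Illustrative internal targets; confirm actual deadlines with legal counsel.
REVIEW_WINDOWS = {
    "AU_ONLINE_SAFETY": timedelta(hours=24),  # the 24-hour case mentioned above
    "EU_DSA": timedelta(hours=72),            # assumed internal target
    "US_DMCA": timedelta(hours=72),           # assumed internal target
}
DEFAULT_WINDOW = timedelta(hours=48)          # assumed fallback for other regimes


def review_deadline(received_at: datetime, regime: str) -> datetime:
    """Return the time by which a reviewer should have decided the case."""
    return received_at + REVIEW_WINDOWS.get(regime, DEFAULT_WINDOW)


def is_overdue(received_at: datetime, regime: str, now: datetime) -> bool:
    """True once the review deadline for this case has passed."""
    return now > review_deadline(received_at, regime)


received = datetime(2024, 3, 1, 9, 0, tzinfo=timezone.utc)
print(review_deadline(received, "AU_ONLINE_SAFETY").isoformat())
```

Storing timestamps in UTC, as here, avoids ambiguity when reviewers and requesters sit in different time zones.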

Step 3: Respond — Use templates for faster communication

Under the DSA, the person who submitted a notice must be informed of the decision, and users whose content is restricted must receive a statement of reasons. The DMCA, in turn, expects providers to act expeditiously on valid notices and to notify the affected user, who may file a counter-notice to have the content restored.

Create response templates for:

- Acknowledgment of receipt

- Acceptance and timeline for removal

- Rejection with explanation

- Requests for clarification or evidence

Templated responses save time, support consistency, and help demonstrate fairness and transparency to regulators and users.
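The four template types above could be kept in one place and filled in per case. This is a minimal sketch using Python’s standard `string.Template`; the template wording, the placeholder names, and the `render_response` helper are all hypothetical.

```python
from string import Template

# Hypothetical wording; adapt to your own tone and legal requirements.
TEMPLATES = {
    "acknowledgment": Template(
        "Hello $name, we received your request $case_id on $date."
    ),
    "accepted": Template(
        "Hello $name, request $case_id was accepted. "
        "The content will be removed by $date."
    ),
    "rejected": Template(
        "Hello $name, request $case_id was rejected. Reason: $reason"
    ),
    "clarification": Template(
        "Hello $name, we need more information for request $case_id: $reason"
    ),
}


def render_response(kind: str, **fields: str) -> str:
    """Fill in a template; substitute() raises KeyError if a field is missing."""
    return TEMPLATES[kind].substitute(**fields)


print(render_response("rejected", name="Jane", case_id="TD-1",
                      reason="No infringement found."))
```

Using `substitute()` rather than `safe_substitute()` is a deliberate choice here: a message with an unfilled placeholder fails loudly instead of being sent to the requester.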

Step 4: Track and escalate — Maintain case logs and audit trails

Documentation supports compliance under every takedown regime. Keeping records of DMCA notices and counter-notices helps demonstrate that you acted expeditiously, while the DSA and Australia’s Online Safety Act emphasize accountability and timely action.

Your workflow should include:

- Case IDs and timestamps

- Notes from reviewers

- Escalation paths for edge cases

- Final outcomes and closure dates

Comprehensive logs and audit trails provide evidence of timely and consistent action, reducing risk in case of disputes or regulator audits.
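One way to picture the case log described above is an append-only list of timestamped events attached to each case. The sketch below is an assumption-laden illustration: the `Case` and `AuditEvent` classes, their fields, and the action names are hypothetical, and a production system would persist events to durable storage.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class AuditEvent:
    at: datetime      # timestamp of the action
    actor: str        # who performed it
    action: str       # e.g. "assigned", "escalated", "closed"
    note: str = ""    # reviewer notes


@dataclass
class Case:
    case_id: str
    events: list = field(default_factory=list)
    outcome: Optional[str] = None
    closed_at: Optional[datetime] = None

    def log(self, actor, action, note="", at=None):
        """Append an event; closed cases reject further entries."""
        if self.closed_at is not None:
            raise ValueError("case is closed")
        event = AuditEvent(at or datetime.now(timezone.utc), actor, action, note)
        self.events.append(event)
        return event

    def close(self, actor, outcome, at=None):
        """Record the final outcome and closure date."""
        event = self.log(actor, "closed", outcome, at)
        self.outcome = outcome
        self.closed_at = event.at


case = Case("TD-1")
case.log("reviewer-a", "assigned")
case.log("reviewer-a", "escalated", "unclear jurisdiction")
case.close("lead-b", "removed")
print([e.action for e in case.events])
```

Events are frozen dataclasses so that an entry cannot be edited after it is written, which is the property an audit trail depends on.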

Step 5: Evolve — Keep your takedown process legally up-to-date

Content moderation regulations are evolving quickly:

- The EU DSA mandates structured notice-and-action mechanisms.

- The DMCA defines takedown and counter-notice workflows specific to copyrighted content.

- Australia’s Online Safety Act introduces aggressive response deadlines (as short as 24 hours).

Best practices include:

- Track legal updates across all jurisdictions

- Adjust workflows regularly using modular tools

- Train teams on evolving legal obligations

- Use platforms like Clym to automate and stay aligned

Sticking to outdated processes can quickly put you out of step with evolving regulations, increasing the risk of penalties and reputational harm.

Takedown Workflow Checklist (Visual Aid)

[✓] Structured intake form with legal fields

[✓] Case assignment with regional awareness

[✓] Automated notifications and deadlines

[✓] Response templates for consistency

[✓] Escalation paths and final resolutions

[✓] Audit-ready logs and recordkeeping

[✓] Ongoing legal updates and retraining

How Clym helps businesses manage takedown requests

Clym’s content takedown solution includes:

- Structured, customizable intake forms

- Built-in workflows based on region or regulation

- Case tracking with audit trails

- Role-based user assignments

- Resolution templates and logging

- Integration with other tools in Clym’s compliance platform

Whether you’re responding to a DMCA notice, handling a DSA-flagged illegal post, or processing harmful content under Australia’s Online Safety Act, Clym provides the solution to support timely, consistent, and well-documented takedown processes.

Don’t risk fines or missed deadlines. Manage takedown requests effectively with Clym’s content takedown solution. Learn more about Content Takedown.

FAQs

A takedown request workflow is a structured process that allows businesses to receive, assess, respond to, and track content removal requests. It should align with the regulations that apply to the business, such as the DMCA, the EU Digital Services Act (DSA), and Australia’s Online Safety Act, and typically includes structured intake forms, role-based review assignments, notifications to users, and audit-ready case documentation.

Under the DMCA, service providers must act "expeditiously" to remove or disable access to the allegedly infringing content once they receive a valid takedown notice. If the user who posted the content submits a counter-notice, the provider must restore the content no less than 10 and no more than 14 business days after receiving it, unless the complainant notifies the provider that it has filed a court action.
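The 10 to 14 business day window after a counter-notice is easy to miscount by hand. The following sketch computes it from the date the counter-notice was received. It treats Monday to Friday as business days and ignores public holidays, which is a simplifying assumption, and the function names are hypothetical.

```python
from datetime import date, timedelta


def add_business_days(start: date, days: int) -> date:
    """Advance by the given number of weekdays (public holidays are ignored)."""
    current = start
    remaining = days
    while remaining > 0:
        current += timedelta(days=1)
        if current.weekday() < 5:  # 0-4 are Monday to Friday
            remaining -= 1
    return current


def restoration_window(counter_notice_received: date):
    """Return the earliest and latest restoration dates (10 and 14 business days)."""
    earliest = add_business_days(counter_notice_received, 10)
    latest = add_business_days(counter_notice_received, 14)
    return earliest, latest


earliest, latest = restoration_window(date(2024, 1, 1))
print(earliest, latest)  # 2024-01-15 2024-01-19
```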

Response times under Australia’s Online Safety Act depend on the notice issued. Removal notices from the eSafety Commissioner, for example for Class 1 content such as child sexual exploitation or terrorism material, generally require removal within 24 hours, or within a longer period if the Commissioner allows one. Always check the timeframe stated in the notice itself.

Yes, outcome notifications are required under the EU Digital Services Act. Under Article 16, providers must notify the person who submitted the notice of their decision, and under Article 17 they must give the affected content uploader a statement of reasons. Notifications should state the decision (removal or retention), the reasons behind it, and the available options for redress, and they should be issued without undue delay.

A single workflow can support multiple legal frameworks only if it is configurable by region, content type, and response obligation. For example, some laws require acknowledgment within 24 hours, while others allow longer. Using a modular platform like Clym allows businesses to set workflows that respect local rules and legal timeframes.

Takedown requests may target content that is illegal, infringing, misleading, or harmful. This includes copyright-protected material (under the DMCA), illegal content such as unlawful hate speech (under the DSA), and abusive or threatening content (under Australia’s Online Safety Act). Laws define the categories differently, so businesses should classify requests based on the legal definitions in the applicable region.

The answer varies by jurisdiction. Under the DMCA, rights holders or their authorized representatives may file notices. The EU DSA allows any individual or entity, including trusted flaggers, to report content. Under Australia’s Online Safety Act, users, parents, and schools can report content, and the eSafety Commissioner can issue removal notices directly.

Each framework has dispute processes. Under the DMCA, you may file a counter-notice if you believe the takedown was incorrect, and the provider restores the content 10 to 14 business days later unless the complainant files a court action. Under the DSA, users can appeal content moderation decisions through internal complaint-handling mechanisms or out-of-court dispute settlement bodies.

It’s important to actively monitor changes to the legislation that applies to you, such as new obligations under the DSA or amendments to the DMCA or Australia’s Online Safety Act. Businesses can adopt solutions like Clym that support modular configuration, regulatory coverage across regions, and timely updates as laws evolve.